Triads…

Back in my early days at Microsoft – the late 1990s – groups ran on the PUM (Product Unit Manager) model. I was on the Visual C++ team, and our PUM was in charge of everything related to that team. Reporting to him were the heads of the three disciplines at the time: a dev manager, a test manager, and a group program manager (at some point it was decided that “program manager manager” or “program manager squared” weren’t great job titles).

Becoming a PUM was a high bar; you had to understand all three disciplines in detail, and you had to work well at the “product vision level”.

Technically, there were only PUMs if your team shipped a product; in other cases you had a GM (General Manager IIRC) with the same responsibilities.

This worked quite well; as a member of a team you knew who had the final authority and that person had a single thing to focus on. The buck stopped with that one person.

But there was a “problem”. The fact that there were limited PUM positions and the skills bar was high meant that many dev/test/pm managers weren’t able to move up to PUM positions.

That was a *good* thing; having interacted with a lot of discipline managers, most of them didn’t have the chops to be PUMs in my opinion.

But what it meant was that people were leaving to go to other companies. Some of that might have been Microsoft’s dogged insistence on paying only at the 50th percentile, but it was decided – in Office if my information is correct – that something needed to be done.

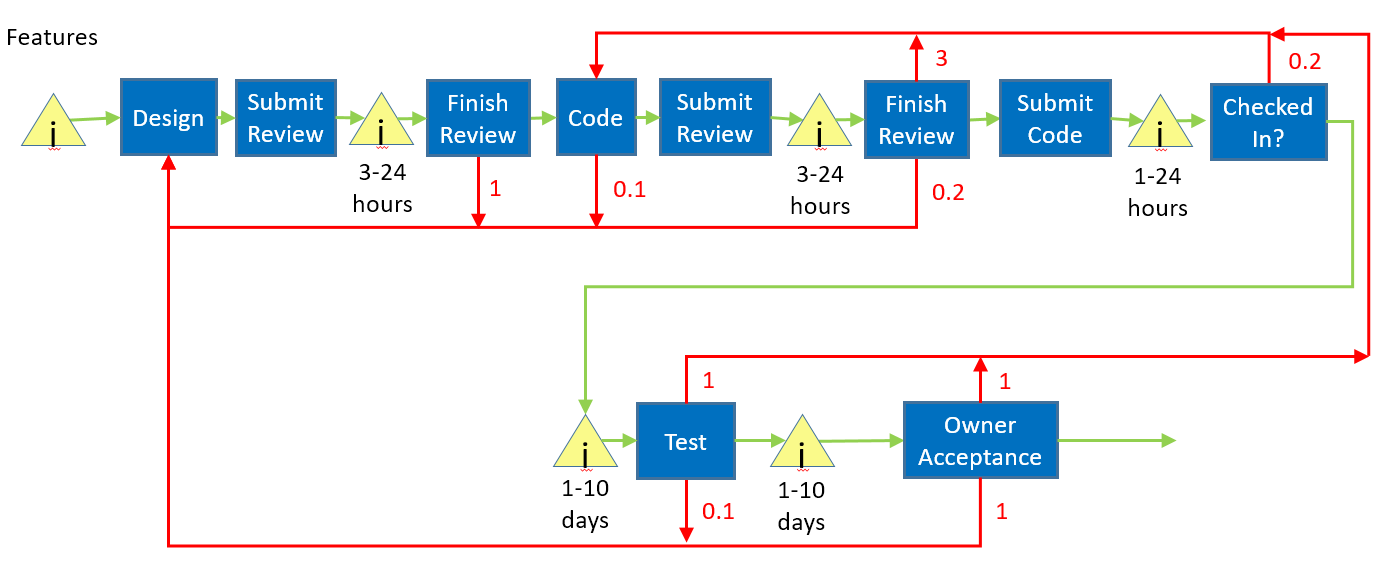

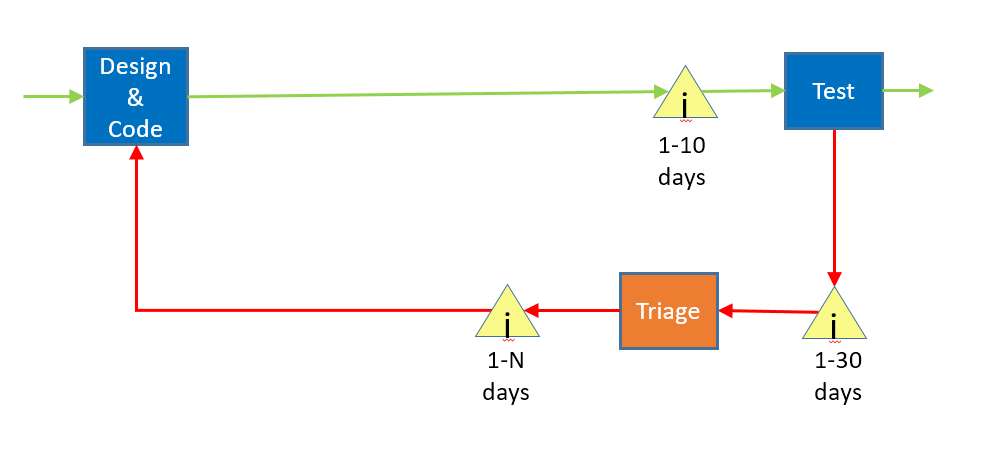

So, the triad was invented. Essentially the disciplines were extended up higher into the management hierarchy, with them only meeting when you got to the VP level. That was 2 or 3 levels up depending on how you look at things.

This is obviously a really bad idea from an effectiveness perspective.

With the PUM model, everybody on a team – whatever the discipline – was working towards a coherent charter on what was important – a vision defined by the PUM.

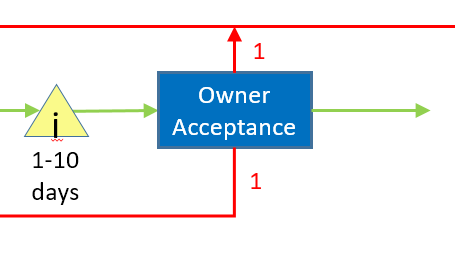

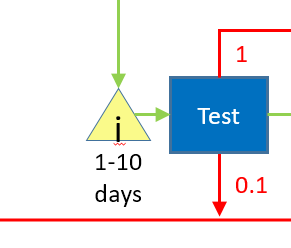

Get rid of the PUM, and now there are three sets of incentive structures at play. The dev manager is trying to do whatever the dev manager above him thinks is important – which is generally something like “ship features”. The GPM is trying to do what the PM manager above wants – something like “come up with flashy features that make us look good”. And the test manager is generally just treading water to try to keep the product from sucking at epic levels.

In the PUM model, those three had to cooperate to achieve what the PUM wanted, but that incentive went away, and what you typically saw was the managers just dividing the overall responsibilities and not really working on the bigger whole. That was the *best case*; the cases where the managers actively did not like each other were far less functional.

On one of the triad teams that I was on, the dev manager had the most political capital, so all the devs got separate offices at the good end of the building. The QA (sorry, “quality” at this point, since that group didn’t write tests) and PM orgs were at the other end of the building in open space. On a different floor.

And if you want to change something about how the disciplines work together – like maybe put a dev team and the associated quality team near each other physically?

Isn’t going to happen. The dev manager wants to keep his space because it’s a power thing, and the only person that can mediate this disagreement is at least 2 levels up. None of the managers in between are going to support you even bringing it up with the VP because it would make them look bad, not that your VP is going to care at all about what is going on at that level; the feedback will be “just go figure it out”.

The separation existed at the budget level as well. So, devs got better machines than the quality team because their hierarchy had more power at budget time. You couldn’t even do morale events together because you had different morale budgets and the higher-level managers wanted to allocate them differently.

As I said, it is a very bad idea from the perspective of effectiveness. If you looked at the skill levels of the higher-level managers, it was pretty pathetic. I went to an all-discipline meeting once in around 2007 where the high-level manager was talking about this new thing he had found out about that was going to revolutionize management.

Dashboards. It was dashboards. I figured that nobody in software management could get to 2007 without knowing about dashboards, but apparently I was mistaken.

So, why was it done and why did it continue so long?

Triads were great at one thing, and that was getting managers promoted up to partner levels. It’s great; there are 3x as many upper management positions, you need fewer skills, and – most importantly – there is *shared responsibility* in every area, so nothing is ever your fault.

Managers are not playing the same game as ICs. They are playing the “get promoted, get powerful, make partner, make lots of money” game.
